The new Googlebot 74 is big news. What does it mean for SEO? For starters, it's long overdue for many SEOs and web developers. While Chrome has updated quite a bit, Googlebot did not follow suit, which created the need for all sorts of hacks to make sure certain kinds of content were crawled and indexed. That gap is half the reason technical SEO became such a big topic, and one that many digital marketers now understand.

What Is New For SEO From Googlebot 74?

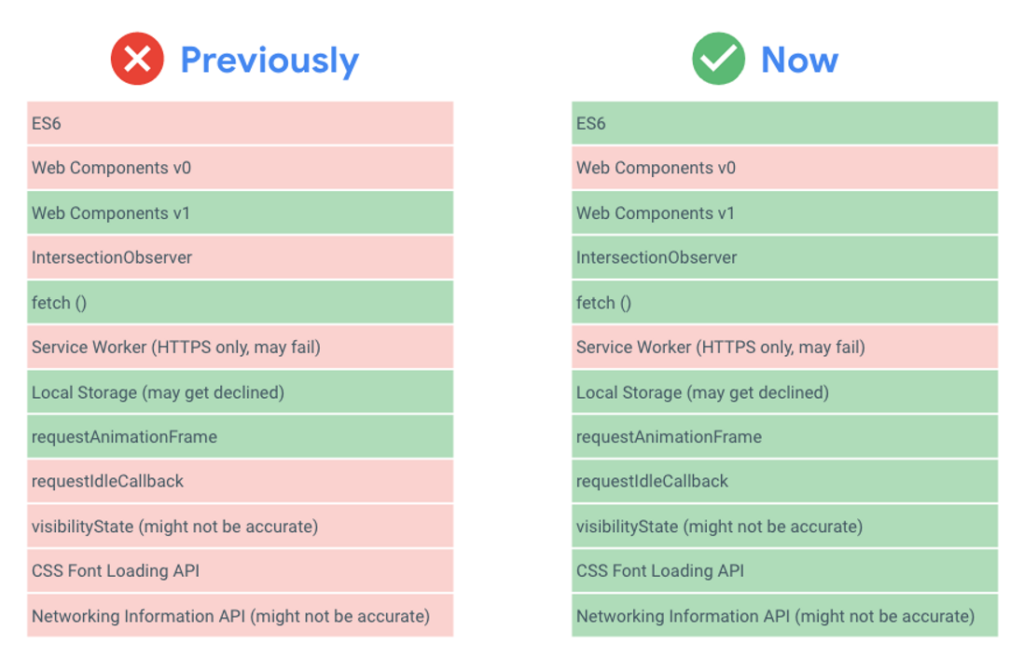

Googlebot 74 supports more than 1,000 new features, including ES6 and newer JavaScript features, IntersectionObserver for lazy-loading, and the Web Components v1 APIs. The new features are welcome, but Googlebot still can't do everything, so Google has published a page on fixing JavaScript issues in Search. And to stay accessible to older browsers, the dirty fixes are still required.

Going forward, Googlebot will regularly update the rendering engine it uses for Search to ensure support for the latest web platform features. That means no more four-year gaps between what Chrome can do and what Googlebot can crawl and index. One thing to note: with the engine changing regularly, there's a very good chance Google Search Console will need closer monitoring for any new crawl errors!

What Was Wrong With The Previous Googlebot?

The older Googlebot wasn't able to render many modern JavaScript features and APIs, which forced all sorts of workarounds that often didn't go smoothly. When you factor in crawl budgets and rate limiting, the hurdles to getting a website indexed quickly become clear. Too often, SEOs and websites in general were forced to focus more on search bots than on users. On top of that, there was a gap of roughly four years between Googlebot's rendering engine and the latest Chrome version.

Googlebot Still Has Room To Improve

There is always room to improve, and despite this giant leap, Googlebot still has further to go. Googlebot currently processes JavaScript pages in two waves: it crawls and indexes the raw HTML first, then renders the JavaScript later. So content that depends on JavaScript may still not be crawled completely, and there will be a delay before it is indexed. That needs to be considered and accounted for when building a website with JavaScript.
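One rough way to account for the two waves is to check whether important content already appears in the raw HTML the server returns, before any JavaScript runs. The sketch below assumes you already have that HTML as a string (for example, from a plain HTTP fetch); the names `VisibleTextParser` and `content_in_initial_html` are hypothetical, and this is only an approximation, not how Googlebot itself processes pages.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Hypothetical helper: collects text that sits outside <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0          # >0 while inside <script> or <style>
        self.chunks: list[str] = []   # visible text fragments, in document order

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Ignore script/style bodies and whitespace-only text nodes.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def content_in_initial_html(raw_html: str, phrase: str) -> bool:
    """Return True if `phrase` appears in the visible text of the unrendered HTML."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(parser.chunks)
    return phrase.lower() in text.lower()


# Content injected by JavaScript is missing from the raw HTML...
client_side = (
    '<html><body><div id="app"></div>'
    '<script>document.getElementById("app").textContent = "Blue widgets";</script>'
    "</body></html>"
)
# ...while server-side rendered content is there from the first wave.
server_side = '<html><body><div id="app"><h1>Blue widgets</h1></div></body></html>'

print(content_in_initial_html(client_side, "Blue widgets"))  # False
print(content_in_initial_html(server_side, "Blue widgets"))  # True
```

If the check returns False for content you care about, that content is relying on the rendering wave, which is where pre-rendering or SSR can help.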

Pre-rendering and server-side rendering (SSR) are still catch-all solutions to deploy, since they make sure content is rendered the same no matter the user agent. The main takeaway from all this is that more of the modern web is being rendered and indexed. That means websites can focus more on the user experience when building and less on Googlebot, which is a win/win for everyone.

Does your website still have indexing issues? It’s Not Google…

Contact SEOByMichael to schedule an SEO audit, and let's figure out what's keeping your website from being indexed!