How Do 404 Error Pages Limit Webpage Indexing?

404 page errors are simply URLs that either no longer exist or never existed on the website. The problem arises when a webpage is still part of the website and only its URL changed, but no 301 redirect was set up. Searchbots will then think that webpage really did disappear. That causes the searchbot to stop crawling the links on that webpage, which limits the number of pathways a searchbot has to the webpages it linked out to.

How Do You Find Website 404 Page Errors?

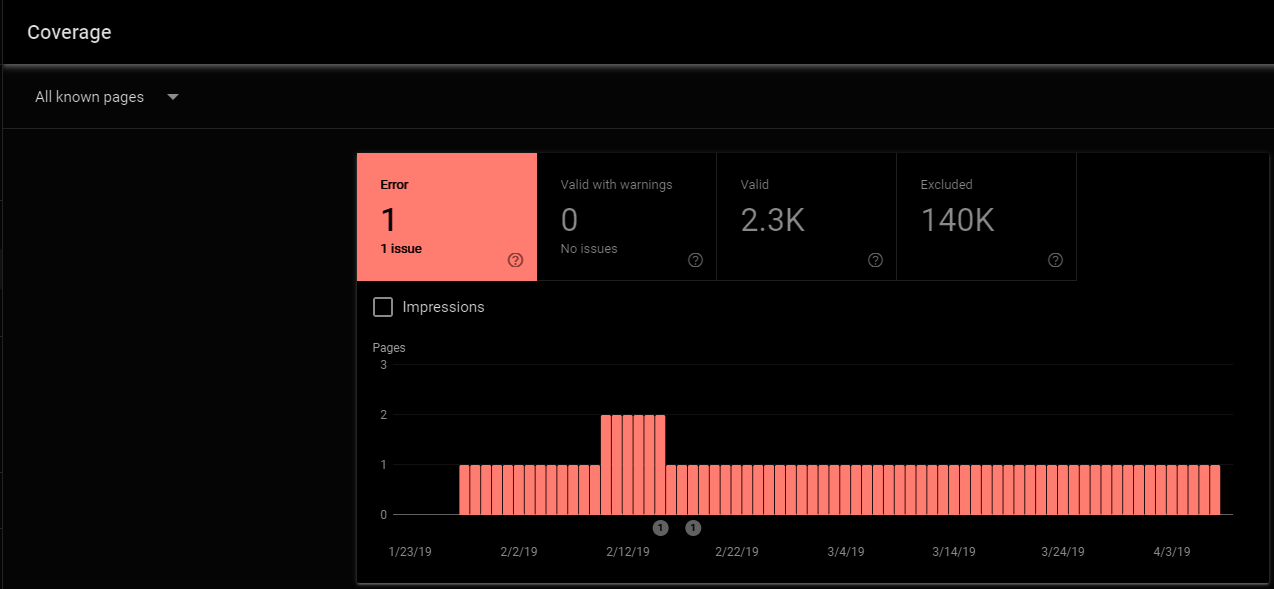

Google Search Console (GSC) or Bing Webmaster Tools is one place to check for 404 page errors. There is a lot of information that can be gleaned from GSC, which makes having someone manage Google products even more crucial to website success. Routine Google Search Console management can find 404 errors and take care of redirects BEFORE any keywords are lost, instead of lost keywords being the first warning sign!

There are other avenues to find broken URLs on websites if using GSC or Bing Webmaster Tools isn’t your cup of tea. Website crawling software like Screaming Frog or Xenu Link Sleuth can find broken links as well. Keep in mind that website crawlers only find 404s that are linked from the website as it exists today; they will not find older 404s, since they crawl only the live website. So even if GSC and Bing Webmaster Tools aren’t your cup of tea, you won’t get all the possible 404 webpages fixed unless you use search engine tools as well.
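To make the crawler side of this concrete, here is a minimal sketch of a broken-link check over links collected from a live site. It is not how Screaming Frog or Xenu Link Sleuth work internally. The names `http_status` and `find_broken_links`, the example paths, and the use of HEAD requests are all assumptions for illustration (some servers reject HEAD, and network failures are not handled here).

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, List, Tuple


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status for a URL (urllib follows redirects)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # e.g. 404 or 410


def find_broken_links(
    pages: Dict[str, List[str]],
    get_status: Callable[[str], int] = http_status,
) -> List[Tuple[str, str]]:
    """Return (source page, broken target) pairs for links that answer 404."""
    cache: Dict[str, int] = {}  # check each target only once
    broken: List[Tuple[str, str]] = []
    for source, targets in pages.items():
        for target in targets:
            if target not in cache:
                cache[target] = get_status(target)
            if cache[target] == 404:
                broken.append((source, target))
    return broken


# Hypothetical crawl data: each live page and the links found on it.
example_pages = {
    "/": ["/services", "/blog"],
    "/blog": ["/blog/old-post", "/services"],
}
# Hypothetical status lookup, used here instead of real HTTP requests.
example_status = {"/services": 200, "/blog": 200, "/blog/old-post": 404}

broken = find_broken_links(example_pages, lambda url: example_status[url])
print(broken)  # [('/blog', '/blog/old-post')]
```

Because the input is built only from links on live pages, a check like this can never surface an old URL that nothing links to anymore. That gap is what the search engine tools fill.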

There are two types of 404 page errors you’ll see in GSC:

- 404 website errors of pages that are current – These are URLs for webpages that exist on the website currently (usually under a changed URL) or used to exist at one point in its history.

- Old 404s and searchbot crawling artifacts – These are URLs searchbots found either through coding syntax errors or crawl anomalies.

Why Worry About Webpage Indexing?

Webpages Lose Keywords When They Are 404 Errors

If a website has a lot of 404s, that website isn’t showing searchbots the proper URLs to its webpages. The website is basically stuck in time from the last time Google indexed it, until the new webpage URLs are found. This normally leads to a loss in keyword rankings, since other websites now rank better just by allowing searchbots to find their content more easily. Add in any backlinks pointing to the webpages that have been 404ing, and more web traffic and keyword rankings will be lost. Once a 301 redirect is set up, a lot of what was lost should return; however, it doesn’t always happen quickly.

Crawl Budget Is Slowed Due To Searchbot Dead Ends

It’s as simple as this: 404 webpages cause searchbots to stop crawling. Search engines have a HUGE job ahead of them, so big in fact that it’s currently not possible to crawl and index the entire internet. So to be efficient, searchbots will leave a website once a 404 page is found and come back to it later. Searchbots crawl webpages via the links on each webpage crawled, so when a 404 webpage is crawled, no further links are found, and the crawler moves on to the next website on its list. The searchbot will come back to finish crawling, but on its timescale, not the website’s. So new content and newly set up redirects don’t get seen until searchbots crawl the webpage again or the webpage is submitted to be indexed again.

404 Pages Take A While To Regain Previous Status

Normally, it’s faster to see negative results than positive results. It’s a slippery slope to let a website go untouched for too long. Having a website is not a “set it and forget it” concept; it calls for a “constant gardener” approach, since a website is more like a living thing in need of ongoing maintenance. Everything from 404s and 301 redirects to keyword relevancy and content matters, and so do the technical aspects that aren’t talked about much. Since much of SEO works both directly and indirectly, it’s important to look at SEO variables from all angles.

Poor Internal Linking Makes 404 Pages Dangerous

If a 404 webpage was the only pathway to deeper webpages, then searchbots will not find those deeper webpages. On websites with little to no internal linking, a 404 error can cause more than just that one page to go missing. If the webpage that errored out had links to deeper webpages, and those webpages aren’t linked to from anywhere else, then searchbots have just lost the ability to find those deeper pages. The result is not just keyword loss for that 404 page, but also for all the other webpages that can no longer be crawled. To search engines, those webpages are no longer on the internet.
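The effect can be illustrated with a small simulation. This is a simplified sketch, not a model of how any real searchbot schedules its crawl: it treats the website as a map of internal links, crawls breadth-first from the homepage, and assumes a 404 page exposes no links. The function names and the example site structure are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set


def reachable_pages(links: Dict[str, List[str]], start: str, dead: Set[str]) -> Set[str]:
    """Breadth-first crawl from start; pages in dead answer 404 and expose no links."""
    seen = {start}
    queue = deque([start])
    found: Set[str] = set()
    while queue:
        page = queue.popleft()
        if page in dead:
            continue  # 404: nothing to index and no links to follow
        found.add(page)
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return found


def pages_lost(links: Dict[str, List[str]], start: str, dead: Set[str]) -> Set[str]:
    """Pages a crawler could reach before the 404s appeared but cannot reach now."""
    return reachable_pages(links, start, set()) - reachable_pages(links, start, dead)


# Hypothetical site: "/services/ppc" is linked only from "/services".
site_links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo", "/services/ppc"],
    "/blog": ["/services/seo"],
    "/services/seo": [],
    "/services/ppc": [],
}

lost = pages_lost(site_links, "/", {"/services"})
print(sorted(lost))  # ['/services', '/services/ppc']
```

In this example "/services/seo" survives because the blog also links to it, while "/services/ppc" disappears along with the 404 page that was its only pathway.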

This is why it’s vital to have a well-defined internal linking strategy. The more pathways searchbots have to webpages, the more likely those webpages will be indexed; it’s that simple. Website users will also appreciate the extra contextual linking and can make better connections to website content, which can lead to higher goal completion. Lost pathways like these can be pretty difficult to spot unless a skilled SEO knows to look for them. This is why website indexing optimization audits should be scheduled at least monthly, just to make sure all 404s are redirected, unless they really have no value and need to be forgotten by search engines.
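As a rough sketch of what one step of such an audit could look like, the snippet below compares a list of 404 URLs against a map of 301 redirects and reports the ones that still need attention. The data format, function names, and example paths are assumptions for illustration; a real audit would start from exports of whichever tools are in use.

```python
from typing import Dict, List, Optional


def final_target(url: str, redirects: Dict[str, str], max_hops: int = 10) -> Optional[str]:
    """Follow the redirect map from url; return None on a loop or an overlong chain."""
    seen = {url}
    current = url
    for _ in range(max_hops):
        if current not in redirects:
            return current  # end of the chain (or no redirect at all)
        current = redirects[current]
        if current in seen:
            return None  # redirect loop
        seen.add(current)
    return None  # chain longer than max_hops


def unredirected_404s(not_found: List[str], redirects: Dict[str, str]) -> List[str]:
    """Return 404 URLs with no redirect, or whose redirect ends at another 404."""
    dead = set(not_found)
    needs_work = []
    for url in not_found:
        target = final_target(url, redirects)
        if target is None or target in dead:
            needs_work.append(url)
    return needs_work


# Hypothetical audit input: 404 URLs reported by the tools, plus current 301 rules.
reported_404s = ["/old-services", "/old-blog", "/promo"]
redirect_map = {"/old-services": "/services", "/old-blog": "/promo"}

todo = unredirected_404s(reported_404s, redirect_map)
print(todo)  # ['/old-blog', '/promo']
```

URLs that truly have no value can simply be dropped from the input list before running a check like this, so that only the 404s worth recovering are flagged.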

Is Your Website Losing Keywords From 404 Errors?

Contact SEOByMichael to schedule an SEO audit, find all 404 errors, and redirect them to start regaining lost keywords! Let’s develop a website success strategy!